Introduction: The Crucial Role of User Acceptance Testing (UAT)

User Acceptance Testing (UAT) stands at the crossroads of project success. It’s the final frontier where software meets its true audience—the end-users. As business leaders, decision-makers, and technology enthusiasts, we recognize that UAT is more than a mere checkbox in the development process. It’s the litmus test that determines whether our digital solutions will thrive or falter in the real world.

The UAT Conundrum

Imagine this: after months of meticulous development, countless lines of code, and sleepless nights, your team unveils the shiny new software. The stakeholders applaud, the champagne flows, and the project seems like a resounding success. But then reality hits—the users encounter glitches, struggle with unintuitive interfaces, and curse the very system they thought was flawless.

This scenario is all too common. UAT bridges the gap between theory and practice, between the developer’s vision and the user’s experience. Yet, it’s often treated as an afterthought, a hurried process squeezed into tight schedules. And therein lies the conundrum: how can we ensure that UAT receives the attention it deserves?

The Silent Saboteurs

Let’s delve into the silent saboteurs—the pitfalls that can undermine UAT effectiveness:

- Scope Creep: UAT scope often balloons unexpectedly. New features sneak in, and the number of scenarios to test grows faster than the team can cover them. The result? Exhausted testers, incomplete coverage, and missed defects.

- Assumption Bias: We assume that users will intuitively understand our software. But assumptions breed blind spots. UAT must challenge these assumptions, uncovering usability issues that might otherwise remain hidden.

- Regression Neglect: As we focus on shiny new features, regression testing (the safeguard against unintended side effects) can fall by the wayside. Skipping it puts system stability at risk.

- User Diversity Oversights: UAT often involves a select group of users, but what about the outliers—the ones with unique workflows or accessibility needs? Neglecting their perspectives can lead to exclusionary software.

- Inadequate Data Migration Testing: When transitioning from legacy systems, data migration is critical. Yet, it’s often relegated to a minor role in UAT. Inaccurate data can cripple even the best-designed software.

The Promise of Effective UAT

But fear not! Effective UAT holds immense promise. It’s not just about finding bugs; it’s about validating the entire user journey. When done right, UAT:

- Uncovers Hidden Gems: Users reveal unexpected use cases, suggesting enhancements that transform good software into exceptional solutions.

- Builds User Confidence: A smooth UAT reassures users that their needs matter. It fosters trust and loyalty.

- Saves Costs and Reputation: Early defect detection reduces costly post-launch fixes and helps safeguard your brand’s reputation.

So, let’s embark on this UAT journey together. Buckle up—we’re about to explore the uncharted territories where software meets reality, where glitches become opportunities, and where users reign supreme. 🚀

Understanding User Acceptance Testing (UAT)

User Acceptance Testing (UAT), also known as application testing or end-user testing, plays a pivotal role in the software development lifecycle. Unlike earlier testing phases, which check the software against technical specifications, UAT puts it in the hands of real users, typically in a production-like environment, so they can work with it just as they would after deployment. That is what makes it a critical piece of the puzzle.

Common Mistakes in User Acceptance Testing (UAT)

User Acceptance Testing (UAT) is the final frontier before software goes live. It’s where the rubber meets the road, and end-users take center stage. However, even with the best intentions, UAT can go awry if we don’t tread carefully. Let’s explore some common pitfalls and their repercussions:

1. Inadequate Planning

- Consequences:

- Scope Creep: Without thorough planning, UAT scope can balloon unexpectedly. New features sneak in, and the number of scenarios to test grows faster than the team can cover them. Exhausted testers struggle to cover everything, leading to incomplete coverage and missed defects.

- Rushed Timelines: Inadequate planning often results in tight schedules. UAT becomes a hurried process, leaving little time for comprehensive testing. Rushed testing leads to overlooked issues.

- Unprepared Testers: When planning is lacking, testers may not fully understand their roles. They might lack the necessary training or context, compromising the quality of their testing.

2. Poor Communication

- Consequences:

- Misaligned Expectations: Lack of clear communication between stakeholders, testers, and developers can lead to misaligned expectations. Everyone assumes different things about the software’s behavior, resulting in confusion during testing.

- Missed Requirements: Poor communication can cause critical requirements to slip through the cracks. Testers might focus on the wrong areas, neglecting essential functionalities.

- Inefficient Feedback Loop: Without effective communication channels, feedback loops break down. Testers struggle to report defects, and developers remain unaware of critical issues.

3. Insufficient Test Environment

- Consequences:

- Production Surprises: UAT should run in a production-like environment. If that environment doesn’t mirror the actual production setup, surprises await: differences in databases, configurations, or network conditions can lead to unexpected behavior.

- Missed Compatibility Issues: Inadequate replication of the production environment means compatibility issues might slip by unnoticed. The software might work flawlessly in UAT but fail miserably in the real world.

- Data Migration Nightmares: If data migration isn’t thoroughly tested, you risk migrating inaccurate or incomplete data. Imagine launching a new CRM system with outdated customer records—chaos ensues.
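
One way to catch the migration problems described above is to reconcile the legacy data against the migrated data before users start testing. The following is a minimal sketch, not a full migration test suite. The `CustomerRecord` shape, the `reconcile` helper, and the sample rows are all hypothetical and exist only for illustration; a real CRM schema will differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerRecord:
    """Hypothetical record shape; a real CRM schema will differ."""
    customer_id: str
    email: str
    updated_at: str  # ISO 8601 date, e.g. "2024-01-31"


def reconcile(legacy, migrated):
    """Compare legacy and migrated records keyed by customer_id.

    Returns the ids missing from the new system, the ids that exist only
    in the new system, and the ids whose fields differ between the two.
    """
    legacy_by_id = {r.customer_id: r for r in legacy}
    migrated_by_id = {r.customer_id: r for r in migrated}

    missing = sorted(legacy_by_id.keys() - migrated_by_id.keys())
    unexpected = sorted(migrated_by_id.keys() - legacy_by_id.keys())
    mismatched = sorted(
        cid
        for cid in legacy_by_id.keys() & migrated_by_id.keys()
        if legacy_by_id[cid] != migrated_by_id[cid]
    )
    return {"missing": missing, "unexpected": unexpected, "mismatched": mismatched}


# Illustrative sample data only.
legacy_rows = [
    CustomerRecord("C001", "ana@example.com", "2024-01-31"),
    CustomerRecord("C002", "ben@example.com", "2024-02-10"),
    CustomerRecord("C003", "cy@example.com", "2024-03-05"),
]
migrated_rows = [
    CustomerRecord("C001", "ana@example.com", "2024-01-31"),
    CustomerRecord("C002", "ben@example.com", "2023-11-02"),  # outdated copy
]

report = reconcile(legacy_rows, migrated_rows)
print(report)
# {'missing': ['C003'], 'unexpected': [], 'mismatched': ['C002']}
```

An empty report does not prove the migration is correct, but a non-empty one is a cheap early signal that UAT testers would otherwise be working with inaccurate or incomplete records.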

4. Limited User Involvement

- Consequences:

- Blind Spots: When only a select group of users participates, you miss out on diverse perspectives. Outliers—those with unique workflows or accessibility needs—remain unrepresented. Their feedback is crucial for uncovering blind spots.

- Risk of Rejection: Limited user involvement increases the risk of user rejection post-launch. If users feel excluded from the testing process, they might resist adopting the software.

- Missed Edge Cases: Users bring real-world scenarios to the table. Their creativity reveals edge cases that automated testing might overlook. Ignoring these scenarios can lead to post-launch surprises.

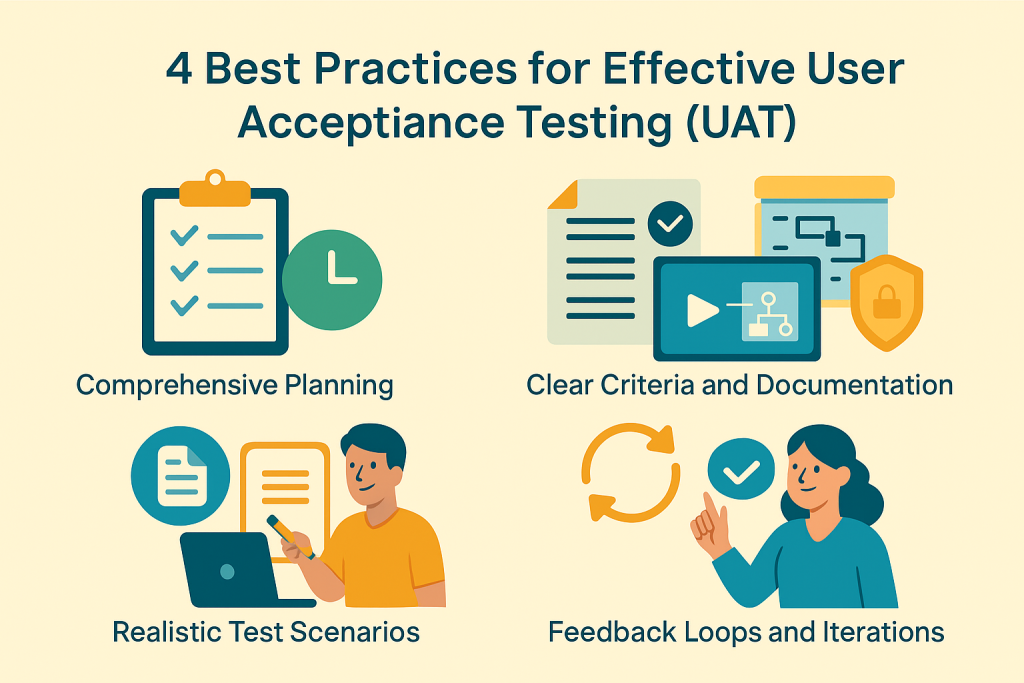

Best Practices for Effective User Acceptance Testing (UAT)

User Acceptance Testing (UAT) isn’t a mere formality—it’s the bridge between development and reality. To ensure successful UAT, consider these best practices:

1. Comprehensive Planning

Summary: Detailed planning is the cornerstone of effective UAT. Here’s why it matters:

- Test Strategy: Craft a robust test strategy that outlines UAT objectives, scope, and approach. Define roles and responsibilities—testers, stakeholders, and facilitators—all aligned with the project’s goals.

- Test Environment Setup: Prepare a production-like environment for UAT. Ensure it mirrors the actual deployment environment, including databases, configurations, and network conditions.

- Test Data Preparation: Populate the test environment with relevant data. Realistic data ensures that UAT scenarios mimic actual usage.
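
To make "mirrors the actual deployment environment" something you can check rather than assume, the settings of the two environments can be compared directly. The sketch below assumes each environment’s settings are available as a plain dictionary. The `config_drift` function and every setting name in it are hypothetical, chosen only to illustrate the idea.

```python
def config_drift(production, uat, ignore=()):
    """Return {key: (production_value, uat_value)} for settings that differ.

    Keys listed in `ignore` (for example hostnames that are expected to
    differ) are skipped. A key that is absent on one side is reported with
    None for that side, so an explicit None and a missing key look the same.
    """
    drift = {}
    for key in sorted(set(production) | set(uat)):
        if key in ignore:
            continue
        prod_value = production.get(key)
        uat_value = uat.get(key)
        if prod_value != uat_value:
            drift[key] = (prod_value, uat_value)
    return drift


# Illustrative settings only; real environments have many more.
production_settings = {
    "db_engine": "postgresql-15",
    "db_host": "prod-db.internal",
    "session_timeout_minutes": 30,
    "feature_new_checkout": True,
}
uat_settings = {
    "db_engine": "postgresql-13",
    "db_host": "uat-db.internal",
    "session_timeout_minutes": 30,
}

drift = config_drift(production_settings, uat_settings, ignore={"db_host"})
for key, (prod_value, uat_value) in drift.items():
    print(f"{key}: production={prod_value!r}, uat={uat_value!r}")
# db_engine: production='postgresql-15', uat='postgresql-13'
# feature_new_checkout: production=True, uat=None
```

Running a check like this before each UAT round turns environment differences into a short, reviewable list instead of a surprise after launch.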

2. Clear Criteria and Documentation

Summary: Clarity is key. Establish precise testing criteria and maintain thorough documentation:

- Test Cases: Develop detailed test cases that cover all functionalities. Each test case should have clear steps, expected outcomes, and acceptance criteria.

- Exit Criteria: Define when UAT is complete. What constitutes a successful UAT? Document the acceptance criteria—whether it’s defect-free execution, performance benchmarks, or usability thresholds.

- Traceability Matrix: Create a traceability matrix linking requirements to test cases. This ensures that every requirement is validated during UAT.
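
The traceability matrix and exit criteria described above can be sketched in a few lines. This is an illustration under stated assumptions, not a prescribed format: the requirement IDs, test case fields, and the 95% pass-rate threshold are all hypothetical, and most teams keep this information in a test management tool or spreadsheet rather than in code.

```python
# Hypothetical requirement IDs and test results, for illustration only.
requirements = ["REQ-1", "REQ-2", "REQ-3"]

test_cases = [
    {"id": "TC-01", "covers": ["REQ-1"], "status": "passed"},
    {"id": "TC-02", "covers": ["REQ-1", "REQ-2"], "status": "failed"},
    {"id": "TC-03", "covers": ["REQ-2"], "status": "passed"},
]


def build_traceability(requirements, test_cases):
    """Map each requirement ID to the test case IDs that cover it."""
    matrix = {req: [] for req in requirements}
    for case in test_cases:
        for req in case["covers"]:
            # Links to requirements outside the UAT scope are ignored.
            if req in matrix:
                matrix[req].append(case["id"])
    return matrix


def exit_criteria_met(matrix, test_cases, min_pass_rate=0.95):
    """Evaluate two example exit criteria.

    1. Every requirement is covered by at least one test case.
    2. The share of executed test cases that passed reaches min_pass_rate
       (the 0.95 default is an assumed threshold, not a standard).
    """
    uncovered = [req for req, cases in matrix.items() if not cases]
    executed = [c for c in test_cases if c["status"] in ("passed", "failed")]
    passed = sum(1 for c in executed if c["status"] == "passed")
    pass_rate = passed / len(executed) if executed else 0.0
    return {
        "uncovered": uncovered,
        "pass_rate": pass_rate,
        "met": not uncovered and pass_rate >= min_pass_rate,
    }


matrix = build_traceability(requirements, test_cases)
result = exit_criteria_met(matrix, test_cases)

print(matrix)
print(f"uncovered={result['uncovered']}, met={result['met']}")
# uncovered=['REQ-3'], met=False
```

Here UAT is not ready to close: REQ-3 has no test case at all, and one of the three executed test cases failed.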

3. Realistic Test Scenarios

Summary: UAT scenarios should mirror real-world usage. Here’s how to achieve realism:

- User Personas: Create user personas representing different roles (e.g., admin, customer, manager), and design scenarios based on their typical tasks.

- End-to-End Flows: Test end-to-end workflows, not just isolated features. Consider scenarios like user registration, order processing, or content creation.

- Edge Cases: Don’t shy away from edge cases. Test scenarios where users push boundaries: invalid inputs, extreme data volumes, or unexpected interactions.

4. Feedback Loops and Iterations

Summary: UAT isn’t a one-shot deal. Iteration and feedback are essential:

- Early Involvement: Involve users early in the process. Gather their expectations, pain points, and preferences. Their insights shape UAT scenarios.

- Iterative Testing: Conduct multiple rounds of UAT. Each iteration refines the software based on user feedback. Fix defects promptly and retest.

- User Satisfaction Metrics: Beyond functional validation, measure user satisfaction. Surveys, feedback forms, and usability metrics provide valuable insights.
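
As one example of a usability metric, the System Usability Scale (SUS) turns a ten-question survey into a score from 0 to 100. SUS is not mentioned elsewhere in this article; it is used here only as an illustration of how survey responses can become a number you track across UAT rounds. The sample responses are made up.

```python
from statistics import mean


def sus_score(responses):
    """Compute a System Usability Scale score from ten 1-5 responses.

    Odd-numbered items (1, 3, 5, 7, 9) contribute (response - 1).
    Even-numbered items (2, 4, 6, 8, 10) contribute (5 - response).
    The sum of contributions is multiplied by 2.5, giving 0 to 100.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for index, response in enumerate(responses):
        if index % 2 == 0:  # items 1, 3, 5, 7, 9
            total += response - 1
        else:  # items 2, 4, 6, 8, 10
            total += 5 - response
    return total * 2.5


# Made-up responses from two UAT participants.
participant_scores = [
    sus_score([4, 2, 5, 1, 4, 2, 5, 2, 4, 1]),
    sus_score([3, 3, 3, 3, 3, 3, 3, 3, 3, 3]),
]
average_score = mean(participant_scores)

print(participant_scores)  # [85.0, 50.0]
print(average_score)       # 67.5
```

Comparing the average across UAT iterations shows whether changes made in response to feedback are actually improving the experience.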

Remember, UAT isn’t just about ticking boxes; it’s about ensuring that your software delights users, meets business goals, and sails smoothly into production. 🌟

Conclusion: Navigating the UAT Waters

In our journey through User Acceptance Testing (UAT), we’ve explored the critical role it plays in project success. But let’s not forget the iceberg lurking beneath the surface—the common mistakes that can sink even the sturdiest UAT ship. As business leaders, decision-makers, and technology enthusiasts, we must steer clear of these pitfalls and embrace best practices for smooth sailing.

The Recap

- Inadequate Planning: We’ve seen how insufficient planning leads to scope creep, rushed timelines, and unprepared testers. Comprehensive planning is our lifeboat—it ensures that UAT stays on course.

- Poor Communication: The lack of clear communication can be our hidden reef. Misaligned expectations, missed requirements, and inefficient feedback loops await those who underestimate its impact. Let’s hoist the communication flag high.

- Insufficient Test Environment: Our UAT vessel must sail in a production-like sea. Replicating the actual environment prevents production surprises, compatibility issues, and data migration nightmares. Let’s set sail with realism.

- Limited User Involvement: The risk of user rejection looms large when we exclude our passengers—the end users. Their diverse perspectives, edge cases, and creativity are our compass. Let’s invite them aboard.

The Promise of Best Practices

Now, armed with best practices, we’re ready to chart a successful UAT course:

- Comprehensive Planning: Our detailed map ensures that UAT objectives align with business goals. The test environment mirrors reality, and testers are well-prepared.

- Clear Criteria and Documentation: Our compass points to clarity. Precise test cases, exit criteria, and traceability matrices guide us toward acceptance.

- Realistic Test Scenarios: We’ll navigate real-world waters. User personas, end-to-end flows, and edge cases keep us on course.

- Feedback Loops and Iterations: Our sails catch the winds of iterative testing. Early user involvement and satisfaction metrics propel us forward.

Anchors Aweigh

As we wrap up, remember that UAT isn’t a mere checkbox—it’s the final validation before our software sets sail. By avoiding common mistakes and adhering to best practices, we ensure that our project reaches safe harbor. So, fellow navigators, let’s raise our UAT flags high, trim the sails, and embark on a voyage where success awaits. Fair winds and following seas! 🌊⚓